There is a lot of talk right now about how AI can be useful in testing. I am a big advocate for the use of AI tools like ChatGPT, Bard, Copilot, etc., and I will likely write more soon about how I see them as useful tools in software development and testing. This will be the first in a series of posts focussed on testing AI / Large Language Models (LLMs) themselves, rather than using them as tools to support testing.

This series of posts was inspired by the Ministry of Testing 30 Days of AI in Testing event. I loved what they were doing and decided now was the time to do something I had been thinking about trying for a while. I wanted to run an LLM locally, and I wanted to learn about writing test automation against it. This first post covers my experience of setting up Llama 3 locally.

Setting up Llama 3 locally

What is Llama 3?

Llama 3 is an open source LLM from Meta. LLMs are machine learning models that can comprehend and generate human language text. There are various models out there, the most famous probably being OpenAI’s ChatGPT. That is actually the name of the service; the LLM it uses is either GPT-3.5 (in the free version) or GPT-4 (in the paid-for version).

You’d be excused for not realising, but there are literally thousands of LLMs out there with a huge range of specialist training. Probably the most common are the text generation models, which include the OpenAI GPT models and Llama 3. But even within text generation you can have specialist training for specific topics; for example, Llama 3 has a variant specifically trained for expertise in code.

If you are interested in looking into just how many models and specialisms are out there, take a look at Hugging Face, a platform for collaboration on models, datasets, and applications.

The Hardware

Running an LLM can be expensive. It can require powerful computers with expensive components. However, you can also do it on relatively modest systems, if the load is low. I have tried this on a couple of different systems:

- Dell R710 server – part of my home lab setup. On this machine I ran it in a VM with access to a full Intel Xeon 5560 CPU and 40 GB of RAM. Running with less RAM was absolutely possible and did not greatly affect performance.

- Desktop PC – my personal daily driver: Intel i7-8700, 42 GB of RAM and, most importantly, an NVIDIA GPU in the shape of an RTX 2060 Super.

Dell Server

I started off on the old Dell server, as I use this for all my virtual machines and general experimentation. I initially followed the Meta guide for getting started. This meant creating a virtual machine running Ubuntu and registering for access to the Llama 3 models.

I won’t go into detail about that process here, as I later found a better setup. However, once set up using the guide from Meta, I had a working LLM. The issue was that, although it was working, each prompt took around 10 minutes to get a response. Ouch! This wasn’t entirely unexpected, given the older CPU architecture and lack of a GPU in this system, but it was still painful and would have made iterating on my tests slow.

LLMs run far better on a GPU than they do on a CPU. GPUs are designed to handle large amounts of data and matrix calculations quickly, which is exactly what is needed for performant LLMs. CPUs can do these calculations, but far less efficiently. This is why Llama 3 works on my server, but not at a usable performance level.

Also, it is worth noting that even though I have a dual-CPU server, running a query would only ever use a single CPU (4 cores, 8 threads). No matter how much CPU was assigned to the VM, it would not make use of the second CPU, which obviously impacted performance. Adding a GPU and/or a more powerful CPU would likely help a lot, but given I have a second machine sat next to the server with the required hardware, I switched to that.

GPU Accelerated

The second machine I tried this with is my desktop, which is a Windows machine but has the all-important NVIDIA GPU. Although AMD Radeon GPUs support AI workloads, NVIDIA has much broader support. The GPU in my system (RTX 2060 Super) is far from the latest or greatest, but for my use case it is plenty. It is also worth noting that much older NVIDIA GPUs are supported, so it needn’t be expensive to add a suitable GPU to an existing system.

When setting up Llama 3 on this system, I decided to take a slightly different approach to the configuration. I used a tool called Ollama to set up and run Llama 3 under WSL (Windows Subsystem for Linux). This route worked great, and I could have a sensibly paced back-and-forth conversation with the LLM.

After the success with Ollama on my desktop, I decided I should go back and try Ollama on my server too. It was much more performant, roughly halving the time it took for responses to be returned. This was still too slow for developing against, but a noteworthy improvement. Realistically, I need the faster CPU and the GPU power to get it to run acceptably fast. For the rest of this post, I will be running on the desktop rather than the server.
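If you want to put rough numbers on comparisons like this yourself, a small timing helper is one way to do it. This is only a sketch: `time_cmd` is a name I have made up for illustration, it measures whole seconds only, and the commented-out example assumes Ollama is already installed with the llama3 model downloaded (both covered later in this post).

```shell
#!/usr/bin/env bash
# Run a command and print how many whole seconds it took.
# time_cmd is a hypothetical helper name, not part of Ollama.
time_cmd() {
    local start end
    start="$(date +%s)"
    # The command's own output is discarded; only the duration is reported.
    "$@" >/dev/null
    end="$(date +%s)"
    echo "$((end - start))"
}

# Example (assumes Ollama is installed and the llama3 model is available):
# time_cmd ollama run llama3 "Why is the sky blue?"
```

Running the same prompt a few times on each machine should give a fairer picture than a single run, since the first request also includes loading the model.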

Configuring an LLM to Run Locally

As I touched on in the hardware section, I ultimately settled on using Ollama with the Llama3-7b-chat model (Llama 3, 7 billion parameters, tailored for chat). I chose this model as it is small while still being a high-quality model for experimenting with. I also looked at other tools, such as privateGPT, which provides a nice GUI and other features. Ultimately, though, I didn’t need the extras it provided for what I wanted to do, so I settled on Ollama.

Installation is straightforward on Linux machines and is best run in WSL on Windows, which is how I am running it.

NOTE: Since originally publishing this article, Ollama have begun offering public access to Ollama for Windows. This significantly simplifies the installation process on Windows: you install the application and can then access Ollama directly from the Windows command prompt, rather than via WSL.

I used a combination of guides to get me started:

- Installing privateGPT in WSL with GPU support – Useful for initial configuration of a WSL environment on Windows and for configuring the NVIDIA drivers.

- Ollama official installation guide for Linux – Once you have your WSL environment set up you can follow the Linux instructions. Also worth noting: since I started this project there is now a Windows preview version of Ollama, which I haven’t used but could be worth a look.

I’ll cover the main points below:

Setting up WSL

(Windows Users only – Linux Users can skip to next section)

Ensure that WSL is enabled on your machine

- Open the Start menu and type “Windows features” into the search bar and click on “Turn Windows Features On or Off”.

- Ensure the “Windows Subsystem for Linux” checkbox is ticked and press the “OK” button.

- When the operation is complete, you will be asked to restart your computer.

Next, open a privileged command prompt (run as administrator) and type

wsl --install

That should install Ubuntu by default. Should that fail, you may need to specify the version of Linux to install like so

wsl --install -d ubuntu

Once you have Ubuntu installed in WSL, you can start your Ubuntu instance from a command prompt by simply typing wsl.
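Once you are at the Ubuntu prompt, you may want to confirm that the shell really is running under WSL. A common heuristic is to look for "microsoft" in the kernel version string. The following is a minimal sketch rather than an official check: `is_wsl` is a helper name of my own, and the optional file argument is only there so the logic can be tried against a sample file.

```shell
#!/usr/bin/env bash
# Heuristic WSL check: WSL kernels normally mention "microsoft" in /proc/version.
# is_wsl is a hypothetical helper; the file argument defaults to /proc/version.
is_wsl() {
    local version_file="${1:-/proc/version}"
    grep -qi "microsoft" "$version_file" 2>/dev/null
}

if is_wsl; then
    echo "Running inside WSL"
else
    echo "Not running inside WSL (or the kernel string was not recognised)"
fi
```

On a native Ubuntu install the second message is the expected one, and you can carry on with the next section as normal.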

Preparing Ubuntu for running an LLM

We need to make sure our Ubuntu install is completely up to date, so first we need to run the following commands

sudo apt-get update

sudo apt-get upgrade

This updates the list of available packages and upgrades those already installed to the latest versions. There should only be one additional package we need at this point, and that is curl. To install it, run

sudo apt-get install curl

With that complete, we should be ready to install Ollama. Note, if you have an NVIDIA GPU installed, then you may also need to install drivers before moving on to the next step. To do so, visit the official NVIDIA drivers website and select

For WSL – Linux > x86_64 > WSL-Ubuntu > 2.0 > deb (network)

For Ubuntu – Linux > x86_64 > Ubuntu > 22.04 > deb (network)

and follow the instructions provided.
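Before moving on, a quick pre-flight check can confirm that the tools from this section are available. This is a rough sketch under a couple of assumptions: `have_cmd` and `report_tool` are names I have made up, and I am assuming `nvidia-smi` (the status utility that ships with the NVIDIA driver) is on the PATH once the driver is working.

```shell
#!/usr/bin/env bash
# Pre-flight sketch: report whether the tools mentioned above are on the PATH.
# have_cmd and report_tool are hypothetical helper names.
have_cmd() {
    command -v "$1" >/dev/null 2>&1
}

report_tool() {
    if have_cmd "$1"; then
        echo "$1: found"
    else
        echo "$1: missing"
    fi
}

report_tool curl
# If nvidia-smi is missing, the GPU is probably not visible to this environment.
report_tool nvidia-smi
```

If `nvidia-smi` is reported as found, running it on its own should list your GPU and the driver version. A missing `nvidia-smi` does not stop Ollama from running; it just suggests you will be limited to the CPU.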

Running Ollama

So, what is Ollama? Ollama is a tool that provides a convenient way to get LLMs running locally. On Linux (and WSL) it is very easy to install and get running. Simply run:

curl -fsSL https://ollama.com/install.sh | sh

and Ollama will be downloaded and installed. Next, we can start Ollama with our choice of model. In this case we will use Llama 3, which by default will download and run the Llama3-7b-chat model.

ollama run llama3

The model we are downloading is a little under 4 GB, so depending on your internet connection it may take a few minutes.

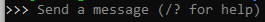

Once complete though you should be presented with a prompt that looks like this:

If so, that’s it, you now have Llama 3 running and you can start testing it!
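As well as the interactive prompt, Ollama exposes a local HTTP API, which is what automated tests will eventually talk to. Below is a minimal sketch of a one-off request using curl. It assumes Ollama is listening on its default address (localhost on port 11434), that the llama3 model has already been downloaded, and that the prompt contains no quotes or other characters needing JSON escaping. The names `OLLAMA_URL`, `build_payload` and `ask_llama` are my own and not part of Ollama.

```shell
#!/usr/bin/env bash
# Sketch of a one-off request to Ollama's local HTTP API.
# OLLAMA_URL, build_payload and ask_llama are hypothetical names for illustration.
OLLAMA_URL="http://localhost:11434"

build_payload() {
    # $1 = model name, $2 = prompt text (assumed to need no JSON escaping)
    printf '{"model": "%s", "prompt": "%s", "stream": false}' "$1" "$2"
}

ask_llama() {
    # Sends the prompt to the generate endpoint and prints the raw JSON reply.
    # With "stream": false the generated text arrives in the "response" field.
    curl -fsS "$OLLAMA_URL/api/generate" -d "$(build_payload llama3 "$1")"
}
```

With Ollama running, calling `ask_llama "Why is the sky blue?"` should print a single JSON document. Setting `"stream": false` keeps the reply in one piece, which tends to be easier to assert against than a stream of partial responses.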

Summary

In this post I have demonstrated how to run the Llama 3 LLM on both Windows and Ubuntu using Ollama. In the second part of this series, I will share how you can leverage the Ollama API with Playwright to begin testing the LLM, along with some areas to consider when writing tests.



April 4, 2024 at 5:19 am


This article was curated as a part of #126th Issue of Software Testing Notes Newsletter.

https://softwaretestingnotes.substack.com/p/issue-126-software-testing-notes

Web: https://softwaretestingnotes.com